Eyes on the Road

Austin Russell hops on a motorized cart. Then he goes whizzing through a cavernous building on the edge of San Francisco Bay, in California. As the 22-year-old rides along, he passes a mannequin, a tire, and a coworker on a bicycle. They are all part of a demonstration. He wants to show how well his company’s sensor can monitor its environment. On a screen near the sensor, the cart and the other shapes appear in rainbow colors. The colors represent exactly how far away they are. All of it is the result of laser beams. They shoot out of a black box and bounce off more than a million points around the room every second.

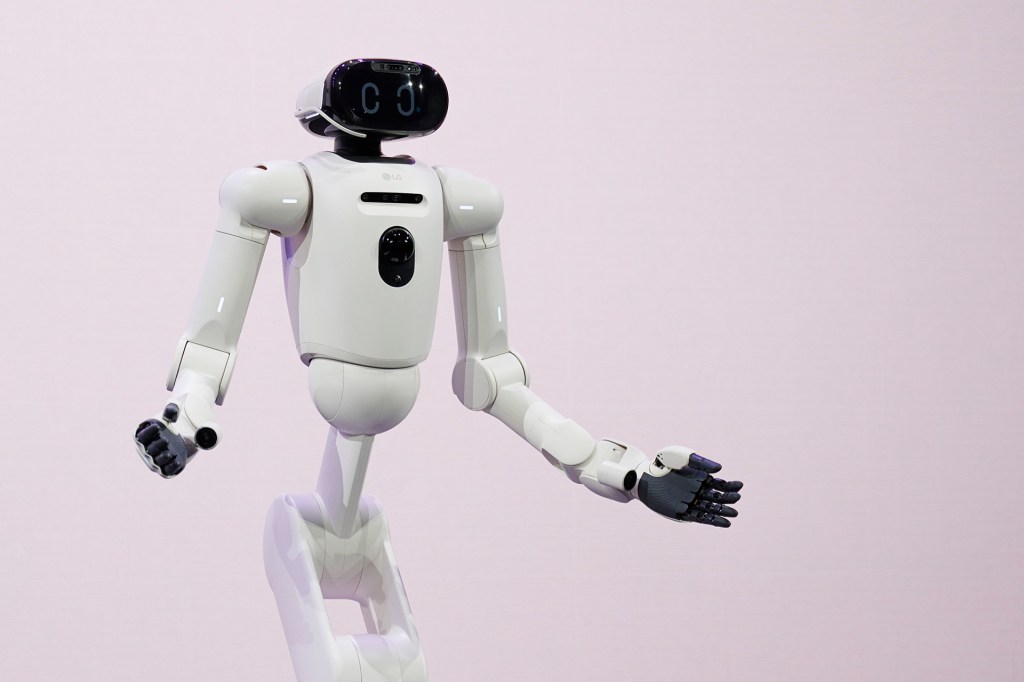

Russell is the head of a company called Luminar. The company is building a sensor to help autonomous vehicles see. Autonomous vehicles can drive themselves without the help of a human. “It’s easy to make an autonomous vehicle that works 99% of the time,” Russell says. “But the challenge is in that last 1% of all the different edge cases that can happen.”

Edge cases could mean anything from a sudden snowstorm to pedestrians overflowing onto the street. For self-driving cars to become a reality, vehicles must “see not just some of the objects some of the time but all of the objects all the time,” Russell says. He is working on a technology known as lidar. Lidar is a short name for “light detection and ranging.”

No Single Solution

New cars might have multiple sensors already. But each type of sensor has issues. For example, cameras are helpful for backing up. But they don’t work well in snow or darkness. Radar sensors keep cars at a safe distance from other cars. But they are better at detecting metal than soft stuff, like humans.

Until now, lidar has been too expensive for most people to use. New companies, called startups, are racing to make lidar cheaper. But cheaper lidar must provide a high-quality result for use in a driverless car.

Robot cars might never get tired or have a fit of road rage like humans do. Still, “there is no technology that can match the amazing ability of the human eye [and] brain to comprehend a car’s surroundings. The closest we can get is with lidar,” Yaron Toren writes in an email. He is the vice president of a startup called Oryx.

Elon Musk is the head of Tesla. He has argued that advanced radar could do the same job as lidar. Other startups are working on super-powered cameras to help cars see more clearly. Experts say autonomous vehicles will almost certainly use a mix of sensors, just as people rely on a mix of senses. “I wouldn’t want to trust only one sense,” Andy Petersen says. He is a hardware expert at the Virginia Tech Transportation Institute. “There are ways you can fool any one of those sensors. It would be hard to fool them all.”